ktmidi, a Kotlin MPP Library for MIDI 1.0 and 2.0

I have been mostly hacking on my hobby projects these days without writing any blog posts or announcements (in English, here), though I probably should more often... I mostly don't because the projects are not quite solid. But today I have something I am eager to introduce rather than waiting for it to mature.

Moving forward with Kotlin

I used to maintain a C#/.NET library called managed-midi, which is technically my best-known personal project. I use it quite extensively in my other projects, some of which are essential tools in my creative life (if such a thing exists!).

Unfortunately, my desktop is Linux, and .NET has become more and more "irrelevant" there in recent years, after the Xamarin acquisition put it under the Visual Studio organization (namely, they totally killed MonoDevelop). .NET has become "not for me", and I was looking for an alternative development ecosystem.

There are a couple of "requirements" for my new world:

- the framework and toolchain must be freely available

- runnable on Linux desktop and Android

- the primary development environment that the majority of its developers use has to be free (ideally as in speech, but as in beer is acceptable)

- runs within a vscode extension, ideally without pain (e.g. a separate runtime installation)

- ideally, can be embedded/hosted in native code

Most languages and frameworks qualify, but .NET apparently does not meet the third item.

I also looked at MIDI libraries from others, particularly in C++. libremidi, a successor of RtMidi + ModernMIDI, is the closest to what I need. But I ended up sticking to my own design because I need an extensible MIDI access API, just like the one I used in my "FluidsynthMidiAccess" implementation (it makes use of the fluidsynth API, not a platform API). I believe we could do that with juce_audio_devices too, but I don't want to bring JUCE-isms into my code. I need everything under the MIT license or similar.

After all, my primary target platform has been Android, so I ended up migrating things to Kotlin, and the ktmidi project was newly launched.

Kotlin is becoming widely adopted, and it now supports the JVM, platform native, and JavaScript. Wasm is going to join the family as well (it used to be part of native, but it seems better to have it as a separate target). It may not be ideal yet, but it is sufficient for me so far.

The code migration itself was actually done much earlier, when I ported my C# fluidsynth-midi-device-service to Kotlin as fluidsynth-midi-service-j, but it was not meant for general consumption at that time. There is not much of interest in converting C# code to Kotlin. Kotlin code looks nicer in general, except for one cumbersome issue: unsigned integers are generally not first-class citizens. There are a bunch of extraneous integer type casts that make the code non-intuitive and quite bug-prone, due to surprising value ranges and comparisons.
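To illustrate the kind of surprise meant here: Kotlin's `Byte` is signed, so a MIDI status byte such as 0x90 reads back as a negative number unless it is masked. The following is only a minimal sketch; the helper names `statusOf` and `isNoteOn` are made up for this example and are not part of ktmidi.

```kotlin
// Kotlin's Byte covers -128..127, so MIDI status bytes (0x80..0xFF) are negative.
// Masking with 0xFF after toInt() removes the sign extension.
fun statusOf(raw: Byte): Int = raw.toInt() and 0xFF

// True when the status byte is a note-on on any of the 16 channels.
fun isNoteOn(raw: Byte): Boolean = (statusOf(raw) and 0xF0) == 0x90
```

With these, `statusOf(0x90.toByte())` gives 0x90, whereas the raw `0x90.toByte().toInt()` is -112 and the comparison `0x90.toByte() >= 0x80` is false.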

Basic ktmidi features

ktmidi aims to become a general purpose MIDI manipulation utility across platforms as a Kotlin Multiplatform library. It has three primary purposes:

- MidiAccess: provides ways to access platform MIDI device inputs and outputs through a cross-platform API, like the W3C Web MIDI API, but supports creating virtual MIDI ports too (if possible on the platform).

- MidiMusic: provides functionality to read, construct, and write Standard MIDI Files (SMF), or just the in-memory structure without files.

- MidiPlayer: provides the music playback feature. It is just an event emitter, not "real time".

This post is not documentation, so I will skip how to import the packages from Maven Central. But I will show how they can be used (an example on the JVM):

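import java.io.File

import java.nio.file.Files

import java.nio.file.Path

// for some complicated reason we don't have a simple "default" MidiAccess API instance,

// so use ALSA when its sequencer device exists and the javax.sound.midi based one otherwise

val access = if (File("/dev/snd/seq").exists()) AlsaMidiAccess() else JvmMidiAccess()

// fileName is assumed to hold the path to an SMF file

val bytes = Files.readAllBytes(Path.of(fileName)).toList()

val music = MidiMusic()

music.read(bytes)

val player = MidiPlayer(music, access)

player.play()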

These were the primary features available in managed-midi too. At this stage, though, ktmidi has somewhat fewer features than managed-midi, especially in MIDI access... for example, we don't have native iOS CoreMIDI support yet. In fact the native iOS build is disabled for the time being because it cannot be built in the ubuntu-20.04 GitHub Actions environment. Building a comprehensive platform API would actually be a lot of work... unlike .NET, you cannot implement a platform API once for Kotlin/JVM (JNI), Kotlin/Native (cinterop), and even Kotlin/JS (node-ffi) at the same time. macOS is still kind of supported via the "JVM" implementation (which uses the javax.sound.midi API), but it lacks some features.

One minor but important feature for me is the ability to create virtual MIDI ports. With managed-midi, I used it in my software MIDI keyboard application, which can then be used directly as a MIDI input device in DAWs, without any routing hassle. In ktmidi, this feature is available only on Linux ALSA so far, but it is potentially implementable on macOS, probably more easily with kotlin-native than with the JVM.

MIDI 2.0 UMPs over virtual MIDI ports

Porting managed-midi as ktmidi was only the first step. I have ported some of my existing C#/.NET apps to ktmidi, and I started to think that I should go beyond what managed-midi did. Thus, I ended up adding experimental support for MIDI 2.0.

For those who are not familiar with it, the MIDI 2.0 specification was released early last year, 40-ish years after the MIDI 1.0 specification was finalized. The 16-channel limit is gone, parameters can be 32 bits wide, timing has become much more precise, there are interactive exchanges between host and client, and so on. It comes with a new MIDI message format called UMP (Universal MIDI Packet), and it can optionally remain compatible with MIDI 1.0, using a sysex-based bidirectional protocol called MIDI-CI (Capability Inquiry).

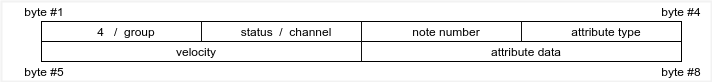

UMPs are cool. Every message is a fixed-size packet of 32, 64, 96, or 128 bits, so there are no more system exclusive messages of unknown length. No need for awkward memory allocation in audio processing loops! And a "JR Timestamp" represents message timing as a single UMP itself (up to about 2 seconds each, but several of them can be chained). Here is an example diagram (from my Japanese book "MIDI 2.0 UMP Guidebook") of what MIDI 2.0 note on/off messages look like. The 256 channels are split into 16 "groups" (0 to 15), each with 16 channels (0 to 15).

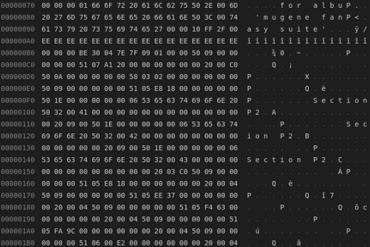

Here is what UMP binaries look like (the dump also contains some non-standard bits). You'll find them much more readable than SMF, whose message length varies.
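To make the fixed-size layout a little more concrete, here is a minimal sketch of packing a MIDI 2.0 note-on into its 64-bit UMP and of deriving a packet's length from its first word. The field positions and the size table follow my reading of the UMP specification; the function names are hypothetical and are not ktmidi's API.

```kotlin
// Number of 32-bit words in a UMP, decided by the message type in the top 4 bits.
fun umpWordCount(firstWord: Int): Int = when ((firstWord ushr 28) and 0xF) {
    0x0, 0x1, 0x2, 0x6, 0x7 -> 1      // utility, system, MIDI 1.0 channel voice (0x6, 0x7 reserved)
    0x3, 0x4, 0x8, 0x9, 0xA -> 2      // 7-bit sysex/data, MIDI 2.0 channel voice (0x8..0xA reserved)
    0xB, 0xC -> 3                     // reserved 96-bit types
    else -> 4                         // 0x5 (8-bit data) and reserved 0xD..0xF
}

// Packs a MIDI 2.0 note-on: type 0x4, group, status 0x9, channel, note, attribute type,
// then a 16-bit velocity and 16-bit attribute data in the second word.
fun midi2NoteOn(
    group: Int, channel: Int, note: Int, velocity16: Int,
    attributeType: Int = 0, attributeData: Int = 0
): Long {
    val word1 = (0x4 shl 28) or ((group and 0xF) shl 24) or (0x9 shl 20) or
        ((channel and 0xF) shl 16) or ((note and 0x7F) shl 8) or (attributeType and 0xFF)
    val word2 = ((velocity16 and 0xFFFF) shl 16) or (attributeData and 0xFFFF)
    // Mask the second word so a set sign bit does not leak into the upper half.
    return (word1.toLong() shl 32) or (word2.toLong() and 0xFFFFFFFFL)
}
```

For example, a full-velocity middle C on group 0, channel 0 packs to `0x40903C00FFFF0000`, and `umpWordCount(0x40903C00)` reports two words.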

Adoption of MIDI 2.0 in audio products across the industry is, however, not very active yet. It is a kind of chicken-and-egg situation: almost no MIDI 2.0 devices are out today and almost no DAWs support MIDI 2.0 devices, given the general unavailability of MIDI 2.0 support in major operating systems and platforms. The only exceptions are the latest macOS and iOS (an assertion that may hold only until WWDC 2021), which support UMPs and MIDI-CI in the CoreMIDI API.

In such a situation, can we even implement "MIDI 2.0 support" in a cross-platform manner? Yes, partially. I haven't implemented anything for MIDI-CI so far, because it is actually a set of application protocols between host and client using system exclusive messages, which can be transmitted even over MIDI 1.0 inputs and outputs.

What I have implemented is mostly support for UMPs in my API. Would that work on a platform MidiAccess API? The answer is still "most likely yes", since the MIDI access API is implemented to only transmit a stream of untyped bytes. If the platform API does not intercept the actual stream bytes, then they can be whatever host and client want. It is a cheat; the "orthodox" way would be to use MIDI-CI, but that is not really mandatory if both the host app and the client app "agree". A pair of "MIDI 2.0 only" apps does not need protocol negotiation.
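As a rough sketch of what "untyped bytes" means in practice, UMP words can be flattened into a byte array before being handed to a byte-oriented output, and rebuilt on the receiving side. The big-endian byte order used here is purely an assumption for illustration (both ends simply have to agree on one), and `umpToBytes` / `bytesToUmp` are hypothetical names, not ktmidi functions.

```kotlin
// Flattens 32-bit UMP words into bytes, most significant byte first (assumed order).
fun umpToBytes(words: IntArray): ByteArray {
    val bytes = ByteArray(words.size * 4)
    for ((i, w) in words.withIndex()) {
        bytes[i * 4] = (w ushr 24).toByte()
        bytes[i * 4 + 1] = (w ushr 16).toByte()
        bytes[i * 4 + 2] = (w ushr 8).toByte()
        bytes[i * 4 + 3] = w.toByte()
    }
    return bytes
}

// Rebuilds the words on the receiving side; each byte is masked to undo sign extension.
fun bytesToUmp(bytes: ByteArray): IntArray {
    require(bytes.size % 4 == 0) { "a UMP stream must be a multiple of 4 bytes" }
    return IntArray(bytes.size / 4) { i ->
        ((bytes[i * 4].toInt() and 0xFF) shl 24) or
            ((bytes[i * 4 + 1].toInt() and 0xFF) shl 16) or
            ((bytes[i * 4 + 2].toInt() and 0xFF) shl 8) or
            (bytes[i * 4 + 3].toInt() and 0xFF)
    }
}
```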

But are there such MIDI I/O devices after all? Physically, most unlikely. But remember what I described as a "minor but important" feature? We can create virtual MIDI devices! I can create my own virtual MIDI devices, and they can be implemented to understand only MIDI 2.0 UMPs(!)

And it is quite easy to downconvert UMPs to MIDI 1.0 messages (whenever the number of channels and the value ranges do not matter). So, before we get actual MIDI 2.0 devices, we can experiment with "virtual" MIDI 2.0 transmission.
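A minimal sketch of such a downconversion, for the note-on case only: the group is dropped, and the 16-bit velocity is reduced to its top 7 bits. Since velocity 0 means "note off" in MIDI 1.0, a result of 0 is raised to 1, which is my understanding of the translation rule in the specification. `noteOnToMidi1` is a hypothetical helper, not a ktmidi function.

```kotlin
// Converts one MIDI 2.0 note-on UMP (two 32-bit words) to three MIDI 1.0 bytes.
// Returns null for any other kind of message. The group number is lost.
fun noteOnToMidi1(word1: Int, word2: Int): ByteArray? {
    val messageType = (word1 ushr 28) and 0xF
    val status = (word1 ushr 20) and 0xF
    if (messageType != 0x4 || status != 0x9) return null
    val channel = (word1 ushr 16) and 0xF
    val note = (word1 ushr 8) and 0x7F
    val velocity16 = (word2 ushr 16) and 0xFFFF
    // Keep the top 7 bits, but never emit 0, which MIDI 1.0 treats as note off.
    val velocity7 = maxOf(velocity16 ushr 9, 1)
    return byteArrayOf((0x90 or channel).toByte(), note.toByte(), velocity7.toByte())
}
```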

There are actually software MIDI synthesizers such as Fluidsynth that support part of the MIDI 2.0 feature set (as discussed in a GitHub issue). We can then send UMPs directly to such MIDI outputs, if we can detect them.

I have another experimental project that transmits MIDI 2.0 UMPs over Android MidiDeviceService, but I will not introduce it today... I will write another post once it's ready.

(I also have a C project called cmidi2, but the scope of that project is limited to UMP generation and interpretation for real-time uses. I do use it in the project above.)

Building a MIDI 2.0 ecosystem

Now I can technically consume UMPs in my apps, but there is almost no product that can generate them. What would we need to build a basic MIDI 2.0 ecosystem? Here is a list of my ideas:

- MIDI editors (typically DAWs) that can receive UMPs from MIDI 2.0 inputs

- MIDI input devices (can be software) that can send UMPs

- MIDI generator that produces music in UMPs

- MIDI player that can read and play music in UMPs

In fact, there is no MIDI 2.0 specification corresponding to SMF yet. It is said to be under active development. However, since the SMF structure is quite simple and there isn't any additional requirement for supporting UMPs in SMF-like formats, we can simply come up with our own (hopefully temporary) format for now. MultitrackStudio supports MIDI 2.0 in its own format too. Representation of time intervals is a different story, and so far I have ended up just reusing the concepts from SMF, replacing "JR Timestamp" values with SMF-compatible "ticks".
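For reference, the relationship between the two time representations can be sketched as follows, in case tick-based deltas ever need to be turned back into JR Timestamps. It assumes a JR Timestamp unit of 1/31250 second and a 16-bit value per packet (hence the roughly 2 second limit and the chaining), plus an SMF-style tempo in microseconds per quarter note. The function names are hypothetical; this is not how ktmidi necessarily does it.

```kotlin
// Converts an SMF-style delta (ticks) into JR Timestamp units of 1/31250 second.
// microsPerQuarter defaults to 500000, i.e. the SMF default tempo of 120 BPM.
fun ticksToJrUnits(ticks: Int, ticksPerQuarter: Int, microsPerQuarter: Int = 500_000): Long =
    ticks.toLong() * microsPerQuarter * 31_250L / (ticksPerQuarter.toLong() * 1_000_000L)

// Splits a duration into as many JR Timestamp UMPs as needed (16 bits each).
// Each word is: message type 0x0, group, status 0x2, then the 16-bit timestamp.
fun jrTimestamps(units: Long, group: Int = 0): List<Int> {
    val result = mutableListOf<Int>()
    var rest = units
    while (rest > 0) {
        val chunk = minOf(rest, 0xFFFFL).toInt()
        result += ((group and 0xF) shl 24) or (0x2 shl 20) or chunk
        rest -= chunk
    }
    return result
}
```

At 480 ticks per quarter note and the default tempo, a delta of 480 ticks is half a second, which comes out as 15625 units and fits in a single packet.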

For generating UMP music files I have my own way. Since ktmidi was essentially a port of managed-midi to Kotlin, and my plan was to port my significant bits to the new development environment, there were already a handful of example API use cases for ktmidi. Not everything has been ported, but my code migration can proceed slowly. One of those tools is an MML (Music Macro Language) compiler, which I named mugene-ng. (I kind of want to rename it, as I haven't really decided how to pronounce it and have left it like the names of gods in Cthulhu...)

Back in 2019, I wrote a blog post about an instant music creation activity, and I explained a bit about MML, noting that I used it as a daily composition aid. To show you what MML looks like, I will just copy-paste from my old post:

// Trombone -----------------

_ 1 CH1 @57 V100 o4 l16 GATE_DENOM8 Q7 RSD60 CSD40 DSD40 BEND_CENT_MODE24 P74

A r2.g4>c4g4 d2.e-4f4b-4 a2.g8agf4.c8 f1

K-5c4d4

e-2.c4g4e-4 f2.f4b-4g4> c1^8r8<br>cr d1.

To be honest, that is kind of an exaggeration nowadays, as I haven't composed any new songs since then (I'm mostly hacking on code), but with MML I can still easily create MIDI messages, and I have added it to my virtual MIDI keyboard apps. Since the keyboard app can work as a virtual MIDI input, I can send any phrase to DAWs using MML. No skill with a physical MIDI keyboard is needed(!)

And now it is implemented in Kotlin, with MIDI 2.0 support. It is the UMP generator I can use. The compiler was basically implemented to simply generate raw MIDI bytes through "macros", so supporting UMPs did not really take a lot of effort. In MIDI 2.0 mode it generates only MIDI 2.0 messages instead of traditional MIDI 1.0 bytes. Together with the virtual MIDI keyboard app, it is super useful for further UMP experiments with the "receivers".

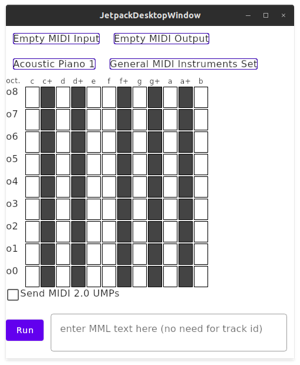

Here is a screenshot of my experimental MIDI keyboard app, written in Jetpack Compose and running on the desktop (Jetpack Compose partially runs on Desktop and Web these days).

I still need to migrate more code, namely the vscode extension support for the MML compiler, and ntracktive, a Tracktion music generator that works from SMF and JUCE AudioPluginHost plugin graphs, but in general things are looking promising.

In any case, I believe this library is going to be useful for Kotlin app developers who want to support MIDI 1.0 and 2.0 features, just as managed-midi was. If you have created any interesting apps, please let me know.